Getting Started with Deep Learning

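- What is deep learning?
  - A technique that learns from data and makes predictions by loosely imitating the neural networks of the human brain
  - Excels at finding complex patterns and rules in large amounts of data
  - More flexible than classical machine learning, since the network structure itself can be designed to fit the problem
  - A human neuron corresponds to a linear model (w·x + b) in deep learning, which is usually followed by an activation function
  - Mainly used for video, audio, and image processing
- tensorflow
  - A deep learning library developed by Google
- keras
  - A user-friendly library that runs on top of tensorflow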
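To make the "neuron = linear model" idea concrete, here is a minimal NumPy sketch of a single neuron, assuming a sigmoid activation (the one used later in this post). The weights `w` and `b` are made-up illustration values, not learned ones.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, w, b):
    # Linear part: weighted sum of the inputs plus a bias (w·x + b)
    z = np.dot(w, x) + b
    # Non-linear part: the activation function
    return sigmoid(z)

# Hypothetical values for illustration only
x = np.array([2.0, 1.0])   # two input features
w = np.array([0.5, -1.0])  # one weight per input
b = 0.0

print(neuron(x, w, b))  # w·x + b = 0.0, so the output is sigmoid(0) = 0.5
```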

In [1]:

# Check the tensorflow version
import tensorflow as tf
print(tf.__version__)  # 2.13.0
# Why check the installed version: to avoid conflicts when reusing open-source code in a project

2.13.0

In [2]:

# Colab runs on Linux, so Linux commands work as-is (prefix them with !)
# Check the current working directory with a Linux command
!pwd  # print working directory

/content

In [3]:

# List the files in the current working directory
!ls

drive sample_data

In [4]:

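# Change the working directory
# %cd (change directory) is an IPython magic; unlike !cd, which runs in a throwaway subshell, the change persists for later cells
%cd "/content/drive/MyDrive/Colab Notebooks/DeepLearning"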

/content/drive/MyDrive/Colab Notebooks/DeepLearning

In [5]:

# Check the current folder path again
!pwd

/content/drive/MyDrive/Colab Notebooks/DeepLearning
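Outside notebook magics, the same three steps (`!pwd`, `!ls`, `%cd`) can be done from plain Python with the standard `os` module. A minimal sketch; the temporary directory stands in for the Drive folder used above, and the script moves back to where it started at the end.

```python
import os
import tempfile

# Equivalent of !pwd: the current working directory
original = os.getcwd()
print(original)

# Equivalent of !ls: the entries of the current directory
print(sorted(os.listdir('.')))

# Equivalent of %cd: change the working directory of the running Python process
# (a temporary folder is used here instead of the Drive path)
target = tempfile.mkdtemp()
os.chdir(target)
moved_to = os.getcwd()
print(moved_to)

# Move back to where we started
os.chdir(original)
```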

Goals

- Build a regression model that predicts math grades from study time

- Practice building a neural network with keras

In [6]:

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

In [7]:

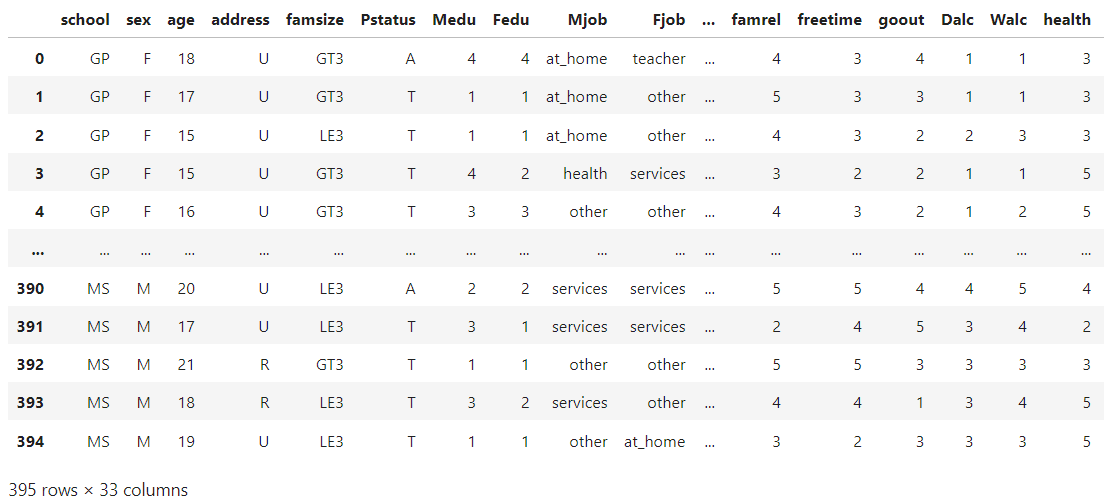

# Load the data (this CSV is separated by semicolons, hence delimiter=';')
data = pd.read_csv('./data/student-mat.csv', delimiter=';')
data

Out[7]:

In [8]:

# Check the data info
data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 395 entries, 0 to 394

Data columns (total 33 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 school 395 non-null object

1 sex 395 non-null object

2 age 395 non-null int64

3 address 395 non-null object

4 famsize 395 non-null object

5 Pstatus 395 non-null object

6 Medu 395 non-null int64

7 Fedu 395 non-null int64

8 Mjob 395 non-null object

9 Fjob 395 non-null object

10 reason 395 non-null object

11 guardian 395 non-null object

12 traveltime 395 non-null int64

13 studytime 395 non-null int64

14 failures 395 non-null int64

15 schoolsup 395 non-null object

16 famsup 395 non-null object

17 paid 395 non-null object

18 activities 395 non-null object

19 nursery 395 non-null object

20 higher 395 non-null object

21 internet 395 non-null object

22 romantic 395 non-null object

23 famrel 395 non-null int64

24 freetime 395 non-null int64

25 goout 395 non-null int64

26 Dalc 395 non-null int64

27 Walc 395 non-null int64

28 health 395 non-null int64

29 absences 395 non-null int64

30 G1 395 non-null int64

31 G2 395 non-null int64

32 G3 395 non-null int64

dtypes: int64(16), object(17)

memory usage: 102.0+ KB

Separating the problem (X) from the answer (y)

- Input features: 1 (studytime)

- Target: G3 (final grade)

In [9]:

# Problem data (X)
X = data['studytime']
# Answer data (y)
y = data['G3']

In [10]:

from sklearn.model_selection import train_test_split

In [11]:

# Split into train and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=915, test_size=0.2)

In [12]:

# Check the sizes
X_train.shape, y_train.shape

Out[12]:

((316,), (316,))

In [13]:

X_test.shape, y_test.shape

Out[13]:

((79,), (79,))
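The 316/79 split follows from how scikit-learn rounds a fractional `test_size`: the test set gets `ceil(test_size * n_samples)` rows and the training set gets the rest. A small sketch of that arithmetic; the helper name `split_sizes` is hypothetical.

```python
import math

def split_sizes(n_samples, test_size):
    # scikit-learn rounds the test set size up and gives the remainder to training
    n_test = math.ceil(test_size * n_samples)
    n_train = n_samples - n_test
    return n_train, n_test

print(split_sizes(395, 0.2))  # (316, 79), matching the shapes above
```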

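Differences between machine learning and deep learning models

- Machine learning
  - A finished toy: only a few parts, like the arms, can move (only the hyperparameters can be tuned)
  - Create the model (use a ready-made object) -> train -> predict -> evaluate
- Deep learning
  - Lego blocks: many different configurations are possible
  - Create the model (assemble it yourself) -> train -> predict -> evaluate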

Machine learning modeling (linear regression model)
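scikit-learn estimators expect the features as a 2D array of shape `(n_samples, n_features)`, while `data['studytime']` is a 1D Series, which is why the cells below call `.values.reshape(-1, 1)`. The `-1` lets NumPy infer the number of rows and the `1` makes a single column. A minimal NumPy sketch with a few illustrative values:

```python
import numpy as np

# A 1D array like X_train.values (illustrative values only)
studytime = np.array([1, 2, 4, 3])
print(studytime.shape)  # (4,)

# -1: let NumPy infer the number of rows; 1: exactly one column (one feature)
X_2d = studytime.reshape(-1, 1)
print(X_2d.shape)  # (4, 1)
print(X_2d)
```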

In [14]:

from sklearn.linear_model import LinearRegression  # linear regression
from sklearn.metrics import mean_squared_error  # evaluation metric for regression models

In [15]:

# 1. Create the model
linear_model = LinearRegression()
# 2. Train the model (training features, training answers)
linear_model.fit(X_train.values.reshape(-1,1), y_train)
# 3. Predict (test features)
linear_pre = linear_model.predict(X_test.values.reshape(-1,1))
# 4. Evaluate (actual values, predicted values)
mean_squared_error(y_test, linear_pre)

Out[15]:

24.058078771701606

In [16]:

X_train.values.reshape(-1,1)

Out[16]:

array([[1], [1], [1], [2], [1], [4], [1], [2], [2], [1], [1], [3], [2], [1],
       ...,
       [2], [2], [2], [2], [2], [2], [1], [4], [3], [2], [1]])

In [17]:

# scikit-learn models expect input features as a 2D array of shape (n_samples, n_features)
# Convert 1D -> 2D
X_train.values.reshape(-1,1)

Out[17]:

array([[1], [1], [1], [2], [1], [4], [1], [2], [2], [1], [1], [3], [2], [1], [2], [2],
       [2], [2], [2], [3], [2], [1], [2], [3], [4], [3], [2], [1], [1], [2], [2],
       ...,
       [2], [1], [4], [2], [2], [2], [2], [2], [2], [1], [4], [3], [2], [1]])

Deep learning modeling (designing the model architecture)

In [18]:

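# Import the deep learning model tools
# keras, bundled inside tensorflow
from tensorflow.keras.models import Sequential
# Sequential: the model's skeleton (layers are stacked in order)
# Building blocks of the network (assembled one by one)
from tensorflow.keras.layers import InputLayer, Dense, Activation
# InputLayer: input
# Dense: fully connected layer (every input is connected to every neuron)
# Activation: activation function (adds non-linearity, loosely imitating how human neurons fire)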

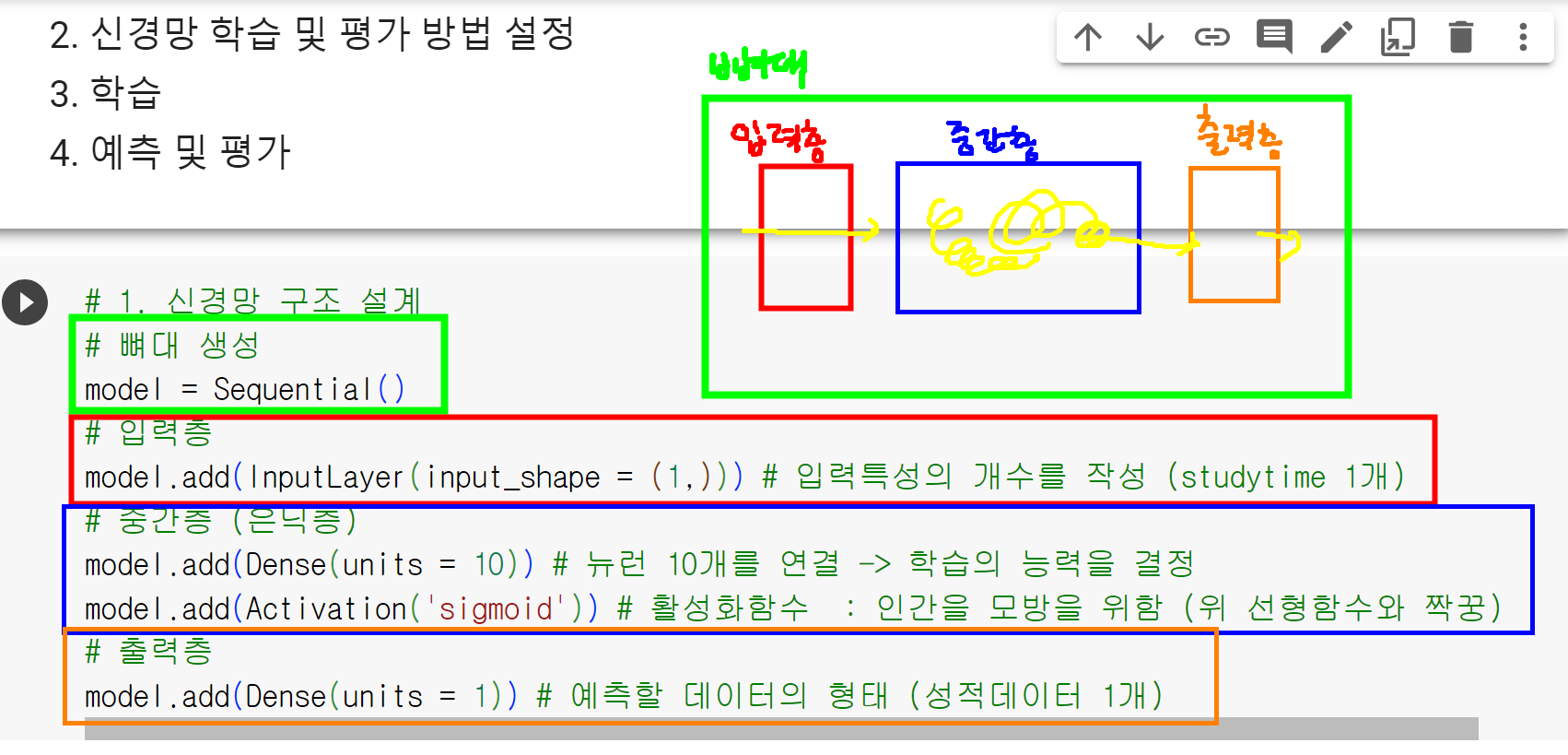

Order of designing a deep learning model

- Design the network structure

- Set how the network is trained and evaluated

- Train

- Predict and evaluate

In [19]:

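# 1. Design the network structure
# Create the skeleton
model = Sequential()
# Input layer
model.add(InputLayer(input_shape = (1,)))  # number of input features (1: studytime)
# Middle layer (hidden layer)
model.add(Dense(units = 10))  # connect 10 neurons -> the number of units determines the model's learning capacity
model.add(Activation('sigmoid'))  # activation function: imitates neurons (paired with the linear Dense layer above)
# Output layer
model.add(Dense(units = 1))  # shape of the value to predict (1 grade value)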

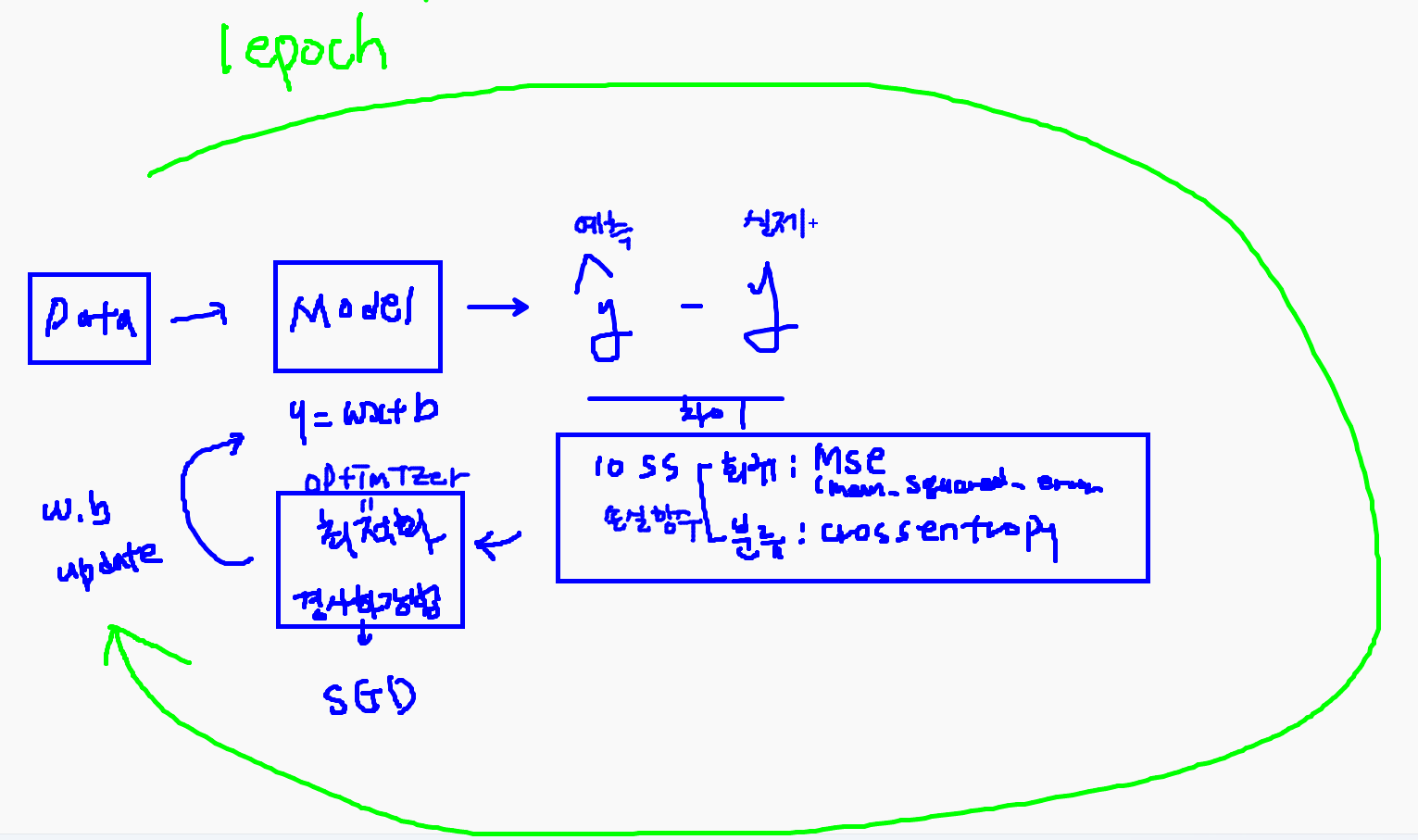
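What the Sequential model above computes can be written out by hand. A minimal NumPy sketch of the forward pass for the 1 → Dense(10) → sigmoid → Dense(1) stack, assuming randomly initialized weights (Keras uses its own initializers, so actual numbers will differ). It also counts the trainable parameters, which for this architecture should match the total that `model.summary()` reports.

```python
import numpy as np

rng = np.random.default_rng(0)

n_features, n_hidden, n_out = 1, 10, 1

# Dense(units=10): weight matrix (inputs x units) and one bias per unit
W1 = rng.normal(size=(n_features, n_hidden))
b1 = np.zeros(n_hidden)
# Dense(units=1): weight matrix (10 x 1) and one bias
W2 = rng.normal(size=(n_hidden, n_out))
b2 = np.zeros(n_out)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X):
    hidden = sigmoid(X @ W1 + b1)  # Dense(10) followed by Activation('sigmoid')
    return hidden @ W2 + b2        # output Dense(1): linear, no activation (regression)

X = np.array([[1.0], [2.0], [3.0], [4.0]])  # four studytime values, shape (4, 1)
print(forward(X).shape)  # (4, 1): one predicted grade per row

n_params = W1.size + b1.size + W2.size + b2.size
print(n_params)  # 31 = (1*10 + 10) + (10*1 + 1)
```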

In [20]:

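# 2. Set how the model is trained and evaluated
# A deep learning model must be told how to learn and how to be evaluated
model.compile(loss = 'mean_squared_error',  # loss function that measures how wrong the model is (the error)
              optimizer = 'SGD',  # algorithm that optimizes the model's w, b values
              metrics = ['mse'])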
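The two settings in `compile` can be spelled out. `mean_squared_error` averages the squared differences between actual and predicted values, and SGD repeatedly nudges `w` and `b` against the gradient of that loss: `w ← w − lr · ∂L/∂w`. A minimal NumPy sketch for a one-feature linear model `y_hat = w * x + b`, not Keras's implementation: the learning rate 0.01 matches the default of Keras's `SGD` optimizer, but this sketch uses the whole toy dataset per step (full-batch), whereas `fit` uses mini-batches of 32 by default. The data points are made up.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean of the squared errors
    return np.mean((y_true - y_pred) ** 2)

def sgd_step(w, b, x, y, lr=0.01):
    # Gradients of the MSE loss for y_hat = w * x + b
    error = (w * x + b) - y
    grad_w = 2 * np.mean(error * x)
    grad_b = 2 * np.mean(error)
    # Move against the gradient
    return w - lr * grad_w, b - lr * grad_b

# Toy data (illustration only): y = 2x + 1
x = np.array([1.0, 2.0, 3.0, 4.0])
y = 2 * x + 1

w, b = 0.0, 0.0
losses = []
for step in range(5000):
    losses.append(mse(y, w * x + b))
    w, b = sgd_step(w, b, x, y)

print(losses[0])                 # 41.0 before any update
print(round(w, 3), round(b, 3))  # close to 2 and 1
```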

In [21]:

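# 3. Train the model (hold out validation data to check generalization)
h1 = model.fit(X_train, y_train, validation_split=0.2,  # keep 20% of the training data aside for validation
               epochs = 20)  # number of passes over the training data (rounds of updates)
# Why store the result in h1: to print the training log and inspect its pattern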

Epoch 1/20

8/8 [==============================] - 1s 46ms/step - loss: 73.9679 - mse: 73.9679 - val_loss: 37.1645 - val_mse: 37.1645

Epoch 2/20

8/8 [==============================] - 0s 18ms/step - loss: 26.2355 - mse: 26.2355 - val_loss: 23.5143 - val_mse: 23.5143

Epoch 3/20

8/8 [==============================] - 0s 9ms/step - loss: 20.0006 - mse: 20.0006 - val_loss: 22.5806 - val_mse: 22.5806

Epoch 4/20

8/8 [==============================] - 0s 10ms/step - loss: 19.4507 - mse: 19.4507 - val_loss: 22.5847 - val_mse: 22.5847

Epoch 5/20

8/8 [==============================] - 0s 8ms/step - loss: 19.4473 - mse: 19.4473 - val_loss: 22.5959 - val_mse: 22.5959

Epoch 6/20

8/8 [==============================] - 0s 10ms/step - loss: 19.4833 - mse: 19.4833 - val_loss: 22.5863 - val_mse: 22.5863

Epoch 7/20

8/8 [==============================] - 0s 16ms/step - loss: 19.5352 - mse: 19.5352 - val_loss: 22.5968 - val_mse: 22.5968

Epoch 8/20

8/8 [==============================] - 0s 10ms/step - loss: 19.4568 - mse: 19.4568 - val_loss: 22.6164 - val_mse: 22.6164

Epoch 9/20

8/8 [==============================] - 0s 15ms/step - loss: 19.4558 - mse: 19.4558 - val_loss: 22.6185 - val_mse: 22.6185

Epoch 10/20

8/8 [==============================] - 0s 12ms/step - loss: 19.4986 - mse: 19.4986 - val_loss: 22.6194 - val_mse: 22.6194

Epoch 11/20

8/8 [==============================] - 0s 10ms/step - loss: 19.5476 - mse: 19.5476 - val_loss: 22.6082 - val_mse: 22.6082

Epoch 12/20

8/8 [==============================] - 0s 9ms/step - loss: 19.4971 - mse: 19.4971 - val_loss: 22.6296 - val_mse: 22.6296

Epoch 13/20

8/8 [==============================] - 0s 12ms/step - loss: 19.5329 - mse: 19.5329 - val_loss: 22.6756 - val_mse: 22.6756

Epoch 14/20

8/8 [==============================] - 0s 12ms/step - loss: 19.4227 - mse: 19.4227 - val_loss: 22.6311 - val_mse: 22.6311

Epoch 15/20

8/8 [==============================] - 0s 13ms/step - loss: 19.4297 - mse: 19.4297 - val_loss: 22.6574 - val_mse: 22.6574

Epoch 16/20

8/8 [==============================] - 0s 10ms/step - loss: 19.4273 - mse: 19.4273 - val_loss: 22.6372 - val_mse: 22.6372

Epoch 17/20

8/8 [==============================] - 0s 12ms/step - loss: 19.4405 - mse: 19.4405 - val_loss: 22.6500 - val_mse: 22.6500

Epoch 18/20

8/8 [==============================] - 0s 9ms/step - loss: 19.4294 - mse: 19.4294 - val_loss: 22.7550 - val_mse: 22.7550

Epoch 19/20

8/8 [==============================] - 0s 17ms/step - loss: 19.4714 - mse: 19.4714 - val_loss: 22.6640 - val_mse: 22.6640

Epoch 20/20

8/8 [==============================] - 0s 17ms/step - loss: 19.4796 - mse: 19.4796 - val_loss: 22.6591 - val_mse: 22.6591
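The `8/8` in the log and the `3/3` in the evaluation below follow from the data sizes. With `validation_split=0.2`, Keras holds out the last 20% of the 316 training rows (taken before shuffling) for validation, and with the default `batch_size` of 32 each epoch runs one step per mini-batch. A sketch of that arithmetic, assuming Keras rounds the training part down; the helper names are hypothetical.

```python
import math

def validation_split_sizes(n_rows, validation_split):
    # Rows before the split point are used for training, the rest for validation
    n_train = int(math.floor(n_rows * (1.0 - validation_split)))
    return n_train, n_rows - n_train

def steps_per_epoch(n_rows, batch_size=32):
    # The last batch may be smaller than batch_size, so round up
    return math.ceil(n_rows / batch_size)

n_train, n_val = validation_split_sizes(316, 0.2)
print(n_train, n_val)            # 252 64
print(steps_per_epoch(n_train))  # 8 -> "8/8" in the training log
print(steps_per_epoch(79))       # 3 -> "3/3" when evaluating on X_test
```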

In [22]:

# 4. Evaluate the model
model.evaluate(X_test, y_test)

3/3 [==============================] - 0s 5ms/step - loss: 24.2153 - mse: 24.2153

Out[22]:

[24.21527862548828, 24.21527862548828]

In [23]:

# Print the training log
h1.history

In [24]:

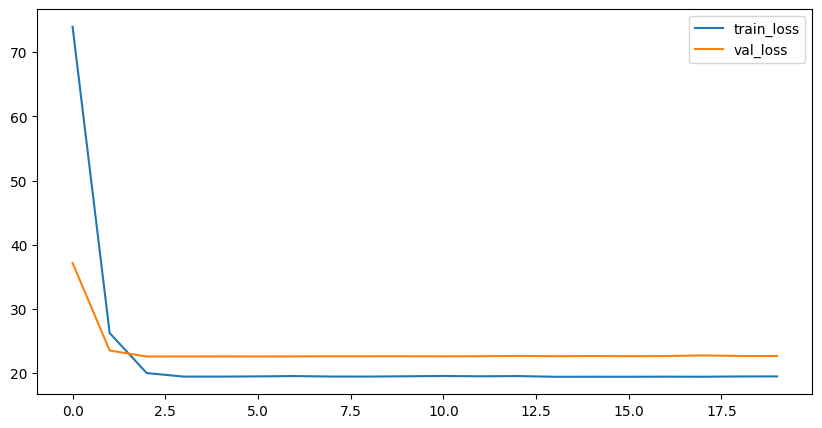

# Visualize the training process
plt.figure(figsize = (10,5))
plt.plot(h1.history['loss'], label = 'train_loss')
plt.plot(h1.history['val_loss'], label = 'val_loss')
plt.legend()  # legend
plt.show()
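`h1.history` is a plain dictionary mapping each metric name (`'loss'`, `'mse'`, `'val_loss'`, `'val_mse'`) to a list with one value per epoch. A small sketch that finds the epoch with the lowest validation loss, using the `val_loss` values from the log above (rounded to 4 decimals as printed); the helper `best_epoch` is hypothetical. In this run the validation loss bottoms out early and then stays flat, which is the situation Keras's `EarlyStopping` callback (e.g. `monitor='val_loss'`) is meant to handle.

```python
# val_loss values copied from the training log above (h1.history['val_loss'], rounded)
val_loss = [37.1645, 23.5143, 22.5806, 22.5847, 22.5959, 22.5863, 22.5968,
            22.6164, 22.6185, 22.6194, 22.6082, 22.6296, 22.6756, 22.6311,
            22.6574, 22.6372, 22.6500, 22.7550, 22.6640, 22.6591]
history = {'val_loss': val_loss}  # stands in for h1.history

def best_epoch(history, key='val_loss'):
    values = history[key]
    # Index of the smallest value; +1 because the log counts epochs from 1
    best_index = min(range(len(values)), key=values.__getitem__)
    return best_index + 1, values[best_index]

print(best_epoch(history))  # (3, 22.5806)
```

With the real object, `best_epoch(h1.history)` works the same way.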


Let's pick 4 input features and train on them

In [26]:

X = data[['studytime','freetime','traveltime','health']]
y = data['G3']

In [27]:

# Split into train and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=918)

In [28]:

# Data sizes
X_train.shape, y_train.shape, X_test.shape, y_test.shape

Out[28]:

((316, 4), (316,), (79, 4), (79,))

In [29]:

# 1. Design the network structure
# Skeleton
model2 = Sequential()
# Input layer: input_shape = number of my features
model2.add(InputLayer(input_shape = (4,)))  # 4 input features
# Middle layer
model2.add(Dense(units = 10))
model2.add(Activation('sigmoid'))
# Output layer
model2.add(Dense(units = 1))
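Changing `input_shape` from `(1,)` to `(4,)` only changes the first Dense layer: each of its 10 units now has 4 weights instead of 1. A small sketch of the parameter count for a stack of Dense layers (weights = inputs × units, plus one bias per unit); the helper `dense_param_count` is hypothetical, and the totals should match what `model.summary()` and `model2.summary()` report for these architectures.

```python
def dense_param_count(n_inputs, layer_units):
    # Each Dense layer has n_inputs * units weights plus units biases;
    # Activation layers add no parameters
    total = 0
    for units in layer_units:
        total += n_inputs * units + units
        n_inputs = units  # the next layer's inputs are this layer's outputs
    return total

print(dense_param_count(1, [10, 1]))  # 31 for model (1 input feature)
print(dense_param_count(4, [10, 1]))  # 61 for model2 (4 input features)
```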

In [30]:

# 2. Set the training and evaluation methods
model2.compile(loss = 'mean_squared_error',
               optimizer = 'SGD',
               metrics = ['mse'])

In [31]:

# 3. Train

h2 = model2.fit(X_train,y_train,validation_split=0.2, epochs = 20)

Epoch 1/20

8/8 [==============================] - 2s 85ms/step - loss: 75.0548 - mse: 75.0548 - val_loss: 44.7557 - val_mse: 44.7557

Epoch 2/20

8/8 [==============================] - 0s 23ms/step - loss: 27.2831 - mse: 27.2831 - val_loss: 28.5800 - val_mse: 28.5800

Epoch 3/20

8/8 [==============================] - 0s 23ms/step - loss: 21.5662 - mse: 21.5662 - val_loss: 26.0360 - val_mse: 26.0360

Epoch 4/20

8/8 [==============================] - 0s 20ms/step - loss: 20.8780 - mse: 20.8780 - val_loss: 25.5468 - val_mse: 25.5468

Epoch 5/20

8/8 [==============================] - 0s 24ms/step - loss: 20.8263 - mse: 20.8263 - val_loss: 25.2391 - val_mse: 25.2391

Epoch 6/20

8/8 [==============================] - 0s 20ms/step - loss: 20.8114 - mse: 20.8114 - val_loss: 25.3522 - val_mse: 25.3522

Epoch 7/20

8/8 [==============================] - 0s 10ms/step - loss: 20.7372 - mse: 20.7372 - val_loss: 25.3165 - val_mse: 25.3165

Epoch 8/20

8/8 [==============================] - 0s 10ms/step - loss: 20.7467 - mse: 20.7467 - val_loss: 25.4160 - val_mse: 25.4160

Epoch 9/20

8/8 [==============================] - 0s 11ms/step - loss: 20.7539 - mse: 20.7539 - val_loss: 25.4508 - val_mse: 25.4508

Epoch 10/20

8/8 [==============================] - 0s 10ms/step - loss: 20.6559 - mse: 20.6559 - val_loss: 25.2578 - val_mse: 25.2578

Epoch 11/20

8/8 [==============================] - 0s 11ms/step - loss: 20.6398 - mse: 20.6398 - val_loss: 25.2272 - val_mse: 25.2272

Epoch 12/20

8/8 [==============================] - 0s 10ms/step - loss: 20.6571 - mse: 20.6571 - val_loss: 25.4038 - val_mse: 25.4038

Epoch 13/20

8/8 [==============================] - 0s 10ms/step - loss: 20.6461 - mse: 20.6461 - val_loss: 25.0518 - val_mse: 25.0518

Epoch 14/20

8/8 [==============================] - 0s 13ms/step - loss: 20.6930 - mse: 20.6930 - val_loss: 25.0887 - val_mse: 25.0887

Epoch 15/20

8/8 [==============================] - 0s 11ms/step - loss: 20.6173 - mse: 20.6173 - val_loss: 25.1529 - val_mse: 25.1529

Epoch 16/20

8/8 [==============================] - 0s 10ms/step - loss: 20.5983 - mse: 20.5983 - val_loss: 25.1557 - val_mse: 25.1557

Epoch 17/20

8/8 [==============================] - 0s 13ms/step - loss: 20.5612 - mse: 20.5612 - val_loss: 25.2016 - val_mse: 25.2016

Epoch 18/20

8/8 [==============================] - 0s 9ms/step - loss: 20.5401 - mse: 20.5401 - val_loss: 25.1513 - val_mse: 25.1513

Epoch 19/20

8/8 [==============================] - 0s 8ms/step - loss: 20.5418 - mse: 20.5418 - val_loss: 25.2365 - val_mse: 25.2365

Epoch 20/20

8/8 [==============================] - 0s 11ms/step - loss: 20.5815 - mse: 20.5815 - val_loss: 25.1426 - val_mse: 25.1426

In [32]:

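# 3. Train again (fit continues from the current weights instead of starting over; h2 now holds only this run's log)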

h2 = model2.fit(X_train,y_train,validation_split=0.2, epochs = 20)

Epoch 1/20

8/8 [==============================] - 0s 20ms/step - loss: 20.3484 - mse: 20.3484 - val_loss: 25.2851 - val_mse: 25.2851

Epoch 2/20

8/8 [==============================] - 0s 10ms/step - loss: 20.4140 - mse: 20.4140 - val_loss: 25.2247 - val_mse: 25.2247

Epoch 3/20

8/8 [==============================] - 0s 13ms/step - loss: 20.3730 - mse: 20.3730 - val_loss: 25.5134 - val_mse: 25.5134

Epoch 4/20

8/8 [==============================] - 0s 10ms/step - loss: 20.3436 - mse: 20.3436 - val_loss: 25.4567 - val_mse: 25.4567

Epoch 5/20

8/8 [==============================] - 0s 11ms/step - loss: 20.2972 - mse: 20.2972 - val_loss: 25.5858 - val_mse: 25.5858

Epoch 6/20

8/8 [==============================] - 0s 11ms/step - loss: 20.2408 - mse: 20.2408 - val_loss: 25.2991 - val_mse: 25.2991

Epoch 7/20

8/8 [==============================] - 0s 11ms/step - loss: 20.1969 - mse: 20.1969 - val_loss: 25.7006 - val_mse: 25.7006

Epoch 8/20

8/8 [==============================] - 0s 15ms/step - loss: 20.2853 - mse: 20.2853 - val_loss: 25.8878 - val_mse: 25.8878

Epoch 9/20

8/8 [==============================] - 0s 12ms/step - loss: 20.2336 - mse: 20.2336 - val_loss: 25.8342 - val_mse: 25.8342

Epoch 10/20

8/8 [==============================] - 0s 12ms/step - loss: 20.3349 - mse: 20.3349 - val_loss: 25.2655 - val_mse: 25.2655

Epoch 11/20

8/8 [==============================] - 0s 19ms/step - loss: 20.2927 - mse: 20.2927 - val_loss: 25.6687 - val_mse: 25.6687

Epoch 12/20

8/8 [==============================] - 0s 18ms/step - loss: 20.2487 - mse: 20.2487 - val_loss: 25.9508 - val_mse: 25.9508

Epoch 13/20

8/8 [==============================] - 0s 12ms/step - loss: 20.2229 - mse: 20.2229 - val_loss: 25.8129 - val_mse: 25.8129

Epoch 14/20

8/8 [==============================] - 0s 11ms/step - loss: 20.3038 - mse: 20.3038 - val_loss: 25.7019 - val_mse: 25.7019

Epoch 15/20

8/8 [==============================] - 0s 20ms/step - loss: 20.1391 - mse: 20.1391 - val_loss: 25.4305 - val_mse: 25.4305

Epoch 16/20

8/8 [==============================] - 0s 12ms/step - loss: 20.1009 - mse: 20.1009 - val_loss: 25.4961 - val_mse: 25.4961

Epoch 17/20

8/8 [==============================] - 0s 12ms/step - loss: 20.2879 - mse: 20.2879 - val_loss: 25.8379 - val_mse: 25.8379

Epoch 18/20

8/8 [==============================] - 0s 10ms/step - loss: 20.1252 - mse: 20.1252 - val_loss: 25.4275 - val_mse: 25.4275

Epoch 19/20

8/8 [==============================] - 0s 13ms/step - loss: 20.1481 - mse: 20.1481 - val_loss: 25.4209 - val_mse: 25.4209

Epoch 20/20

8/8 [==============================] - 0s 19ms/step - loss: 20.0994 - mse: 20.0994 - val_loss: 25.6060 - val_mse: 25.6060

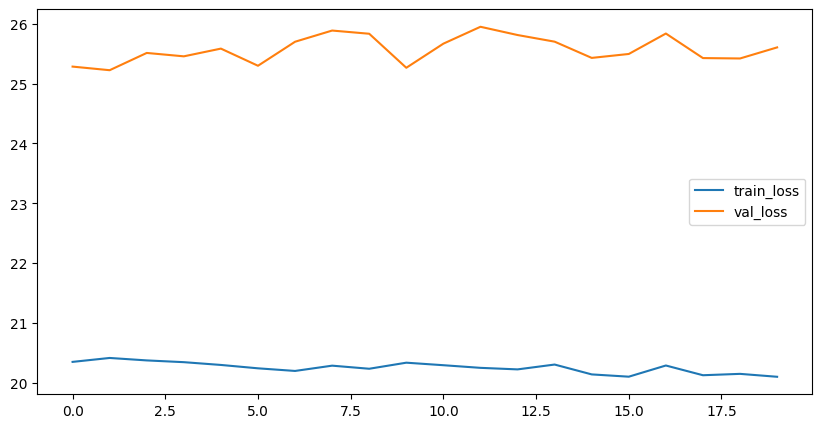

In [33]:

# 5. Visualization
plt.figure(figsize = (10,5))
plt.plot(h2.history['loss'], label = 'train_loss')
plt.plot(h2.history['val_loss'], label = 'val_loss')
plt.legend()  # legend
plt.show()
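Because `h2` was reassigned by the second `fit` call, the plot above shows only the last 20 of the 40 epochs model2 was trained for. One way to plot the whole run is to keep both History objects and concatenate their per-epoch lists. A minimal sketch, assuming the two runs were stored under separate (hypothetical) names such as `h2_first` and `h2_second`; here short toy lists stand in for their `.history` dictionaries.

```python
def merge_histories(*histories):
    # Concatenate per-epoch lists from several History.history dicts, key by key
    merged = {}
    for history in histories:
        for key, values in history.items():
            merged.setdefault(key, []).extend(values)
    return merged

# Toy stand-ins for h2_first.history and h2_second.history (illustration only)
first = {'loss': [4.0, 3.0], 'val_loss': [5.0, 4.5]}
second = {'loss': [2.0, 1.0], 'val_loss': [4.0, 3.5]}

combined = merge_histories(first, second)
print(combined['loss'])  # [4.0, 3.0, 2.0, 1.0]
```

`combined['loss']` and `combined['val_loss']` can then be plotted exactly as in the cell above.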
